For much of this summer I’ve been working remotely on Who’s On First. In early July I was in Korea giving a workshop on offline mapping as part of the Seoul Biennale of Architecture and Urbanism. From there I joined my wife Ellie at an artist residency in Taipei, where I’ve been working 12 hours ahead of my EDT colleagues. Along the way I’ve been collecting new places for WOF, since both Taiwan and South Korea are still rather sparsely populated with venues. This was my excuse to try using my software “in the field” and expand WOF’s venue coverage.

This post includes a fair share of obscure technical details. It might be useful for someone working on a similar application. (And shout-out to the NYPL’s Bert Spaan who’s released a very interesting-looking geotagging Leaflet plugin.)

Start where you are

I started with what I already had running on Boundary Issues and just loaded it up on my phone. (Here is where I mention that the bespoke web-based editor I’m working on for Who’s On First is not quite ready for public use, but I’m blogging about it out in the open anyway. Drop us a line if you think you have something to add.) In addition to the general purpose WOF properties editor, I’ve been working on a pared-down venue-specific interface. I used that simpler venues UI to try adding some new places I’ve enjoyed during my travels.

I am carrying two phones with me. One is an iPhone 5 with T-Mobile’s generous data roaming—a throttled, but functional, version of the mobile data service I get at home (at no extra cost). The other is a Samsung Galaxy S7 with Verizon service, which works great, but not outside the USA. I’ve been sticking to wifi hotspots on the Android phone while I’m traveling.

Slow bandwidth is very real

Instead of investing in a Korean or Taiwanese SIM card, I decided to embrace my slower bandwidth as a useful constraint. By sticking to my throttled roaming data, I’m getting a bit of a taste for what mobile bandwidth bottlenecks feel like day-to-day. My iPhone is limited to 3G networks even though everyone around me has fast LTE connectivity.

I’ve gotten better at planning ahead, making a deliberate effort to cache the map data (and routing results) I know I’ll need to navigate the city. In NYC, my network experience alternates between fast cable, fast mobile data (with a subway break for reading a book), and then back to a fast fiber connection. It’s easy to forget about all the slow connectivity that’s out here.

In testing out Boundary Issues, the first thing I learned is that mobile web slippy maps don’t really work so well on a bandwidth-constrained network. I would load up the “Add A Venue” page and just stare at the place where a map should appear. After waiting long enough, the screen would turn off to save battery and I’d mutter something rude under my breath.

The “headless” option

I decided to try a different approach. What if instead of relying on a mobile web page, I used the latitude/longitude metadata embedded in the photos I took? I could just take pictures and then process them when I got back to a faster internet connection. This had the added benefit of making data collection more enjoyable. Instead of staring down at my pocket supercomputer all the time, I’d just take a quick photo from the lock screen shortcut, and it’d go right back into my pocket.

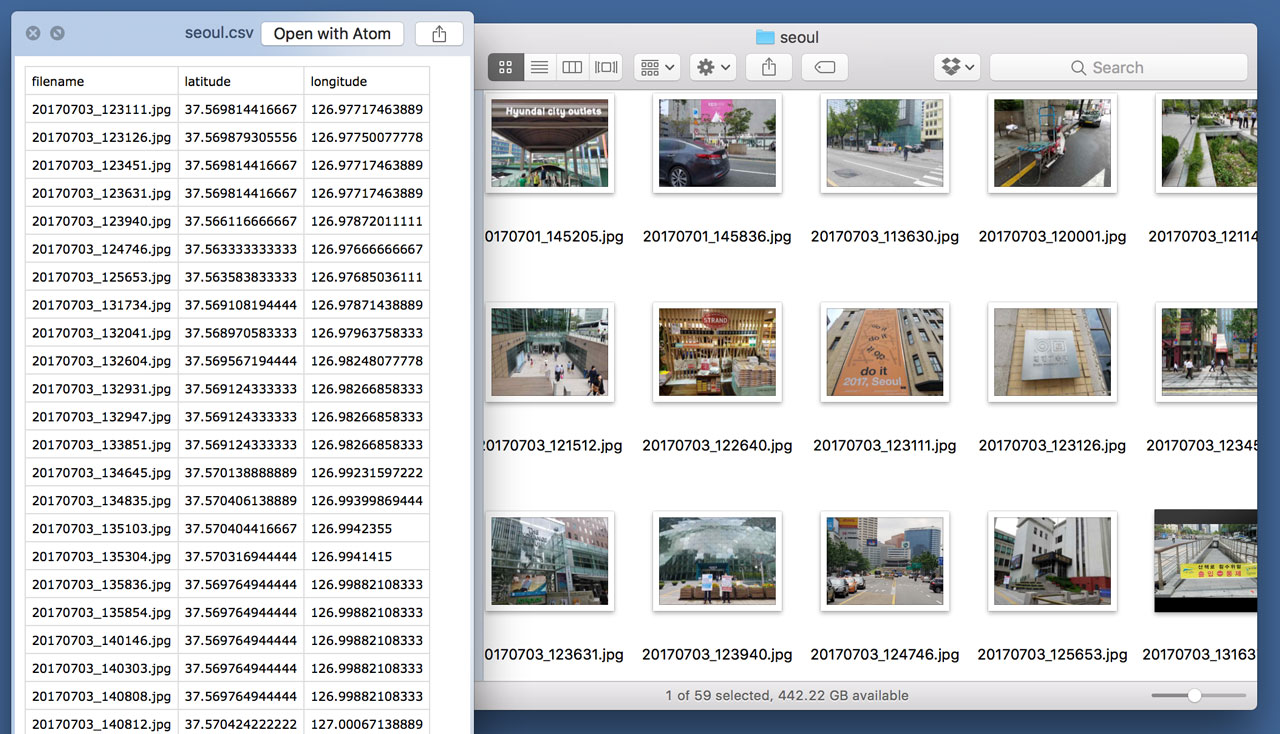

I took a bunch of photos around Seoul with each of my phones. This method was so much faster, and felt less battery-intensive than my mobile web interface. Once I got back to my laptop, I downloaded the photos and ran them through a command-line script that converts a folder of images into a CSV file containing a list of filenames and latitude/longitude coordinates.
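As a rough illustration of that conversion step (not the actual script), here is a minimal sketch. It assumes some EXIF reader has already produced, for each photo, the GPS values as degrees/minutes/seconds arrays plus their N/S/E/W reference letters. The `photo` object shape and the function names are hypothetical.

```javascript
// Convert EXIF-style [degrees, minutes, seconds] into signed decimal degrees.
// `ref` is the EXIF GPSLatitudeRef / GPSLongitudeRef letter: "N", "S", "E" or "W".
function dmsToDecimal(dms, ref) {
  const [degrees, minutes, seconds] = dms;
  const decimal = degrees + minutes / 60 + seconds / 3600;
  // Southern latitudes and western longitudes are negative.
  return (ref === 'S' || ref === 'W') ? -decimal : decimal;
}

// Quote a CSV field only when it contains a comma, quote or newline.
function csvField(value) {
  const text = String(value);
  return /[",\n]/.test(text) ? '"' + text.replace(/"/g, '""') + '"' : text;
}

// Build CSV text from a list of photo records (hypothetical shape, see above).
function photosToCsv(photos) {
  const lines = ['filename,latitude,longitude'];
  for (const photo of photos) {
    const lat = dmsToDecimal(photo.gpsLatitude, photo.gpsLatitudeRef);
    const lng = dmsToDecimal(photo.gpsLongitude, photo.gpsLongitudeRef);
    lines.push([csvField(photo.filename), lat.toFixed(6), lng.toFixed(6)].join(','));
  }
  return lines.join('\n');
}
```

Six decimal places is roughly 10 cm of precision, which is more than a phone's GPS fix can really claim.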

I took that CSV file and imported it into Boundary Issues, using the step-through venue importer. And it worked! The one flaw in this setup was matching up each filename from the CSV with its corresponding photo in order to remember the place names.

At first I opted for my S7’s higher quality camera. But then I noticed Android has a tendency to hold onto an outdated location compared to iOS. Now I prefer the lower-quality photos from my old iPhone, favoring its more consistently accurate geotags. (Perhaps there is some setting in Android to improve its accuracy that I’m missing.)

Skipping the CSV stage

After running a few CSV venue imports, I started to wonder if I could just put the visual cue I’d photographed on the page next to the extracted geotags. I continue to be astonished at the range of things JavaScript can do. As it turns out, you can import/cache JPEG binary data and extract EXIF tags without hitting any server-side code.

The newest incarnation of the geotagged importer is basically an HTML file input wired up to some fancy JavaScript that eventually uploads a GeoJSON WOF record into Boundary Issues. With some important caveats (discussed below), this approach lets you keep your geotagged photo on your own device(s). Boundary Issues inspects the geotags on the client-side, so it doesn’t need to store a copy of the photo itself.
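To make the "geotag in, GeoJSON out" idea concrete, here is a minimal sketch of building a point Feature from a name and decimal coordinates. The function name is hypothetical, and the exact set of properties Boundary Issues expects is an assumption. The `wof:` and `geom:` keys below are only meant to suggest the general shape of a WOF record.

```javascript
// Build a GeoJSON Feature for a venue from a name and decimal coordinates.
// The property keys are illustrative assumptions, not a confirmed schema.
function buildVenueFeature(name, latitude, longitude) {
  if (!Number.isFinite(latitude) || Math.abs(latitude) > 90) {
    throw new RangeError('latitude must be between -90 and 90');
  }
  if (!Number.isFinite(longitude) || Math.abs(longitude) > 180) {
    throw new RangeError('longitude must be between -180 and 180');
  }
  return {
    type: 'Feature',
    properties: {
      'wof:name': name,
      'wof:placetype': 'venue',
      'geom:latitude': latitude,
      'geom:longitude': longitude
    },
    geometry: {
      type: 'Point',
      // GeoJSON coordinate order is [longitude, latitude].
      coordinates: [longitude, latitude]
    }
  };
}
```

The range checks are there mostly to catch swapped arguments, since latitude/longitude order is the classic mistake when moving between EXIF values and GeoJSON.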

At some point we may offer an opportunity for users to opt into a Creative Commons license, and associate their photos with WOF records, but that opens up lots of questions we aren’t yet prepared to address. For now Boundary Issues cares very narrowly about geotag metadata, only keeping a photograph (in localStorage) long enough for you to remember which place it depicts.

윤슬 or Yoonseul is a public art/public space installation in Seoul

EXIF tags contain multitudes

Working with photos straight off the camera has some quirks. Upon reading an image from an <input type="file"> you get a binary Blob (yes, that is the technical name) that contains:

- Technical metadata like width, height, and the tables needed to decode the image.

- A sequence of compressed bits—a “scan”—that represent the image. (In the case of “progressive scan” JPEGs there are multiple scans of increasing quality.)

- “Application-specific” metadata, including Exchangeable Image File Format (EXIF) tags, which carry lots of detailed context (where/when/how it was shot) about the photo.

There are a great many things that EXIF tags can describe about a photo. The tags can tell you which model of camera produced the photo as well as details about the exposure settings like shutter speed and f-stop. In the case of properly configured smartphones, the EXIF tags also contain latitude/longitude/altitude metadata. I normally disable geotags on my phone camera for privacy reasons, but they’ve been very useful for gathering venue data.

Another important tag I inadvertently encountered is the EXIF Orientation mode. If you’ve ever seen a photo that someone posted online that looked upside-down, it’s because the image scan was stored in the upside-down order that the camera captured it, and the EXIF tag instructing the computer how to display the image right-side-up was ignored. Web browsers are still not so great at applying that EXIF orientation mode (see also: my EXIF orientation test page).
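For the curious, here is a minimal sketch of how the Orientation tag can be pulled out of a JPEG's bytes in plain JavaScript. It walks the JPEG segments until it finds the APP1 segment that starts with "Exif", then scans the first TIFF directory for tag 0x0112. This is not the code Boundary Issues uses. The function names are hypothetical, and a real-world parser (or an existing EXIF library) would handle more edge cases.

```javascript
// Return the EXIF Orientation value (1-8) from a JPEG ArrayBuffer, or null.
function readExifOrientation(buffer) {
  const view = new DataView(buffer);
  // Every JPEG starts with the Start Of Image marker 0xFFD8.
  if (view.byteLength < 4 || view.getUint16(0) !== 0xFFD8) return null;

  let offset = 2;
  while (offset + 4 <= view.byteLength) {
    const marker = view.getUint16(offset);
    if ((marker & 0xFF00) !== 0xFF00) break; // not a marker, give up
    if (marker === 0xFFDA) break;            // start of scan, metadata is over
    // Segment length is big-endian and counts its own two bytes.
    const segmentLength = view.getUint16(offset + 2);
    if (segmentLength < 2) break;
    const payload = offset + 4;
    if (marker === 0xFFE1 && hasExifHeader(view, payload)) {
      return orientationFromTiff(view, payload + 6);
    }
    offset += 2 + segmentLength;
  }
  return null;
}

// An EXIF APP1 payload begins with the ASCII bytes "Exif" and two zero bytes.
function hasExifHeader(view, start) {
  return start + 6 <= view.byteLength &&
    view.getUint32(start) === 0x45786966 &&
    view.getUint16(start + 4) === 0;
}

// Scan the first TIFF directory (IFD0) for the Orientation tag, 0x0112.
function orientationFromTiff(view, tiffStart) {
  if (tiffStart + 8 > view.byteLength) return null;
  const byteOrder = view.getUint16(tiffStart);
  if (byteOrder !== 0x4949 && byteOrder !== 0x4D4D) return null;
  const littleEndian = byteOrder === 0x4949; // "II" little, "MM" big
  const ifdStart = tiffStart + view.getUint32(tiffStart + 4, littleEndian);
  if (ifdStart + 2 > view.byteLength) return null;
  const entryCount = view.getUint16(ifdStart, littleEndian);
  for (let i = 0; i < entryCount; i++) {
    const entry = ifdStart + 2 + i * 12; // each directory entry is 12 bytes
    if (entry + 12 > view.byteLength) return null;
    if (view.getUint16(entry, littleEndian) === 0x0112) {
      return view.getUint16(entry + 8, littleEndian);
    }
  }
  return null;
}
```

In a browser, the buffer could come from calling `arrayBuffer()` on the File object that the file input hands you. The GPS tags live in a separate directory that IFD0 points to, so reading them takes a little more walking than this sketch does.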

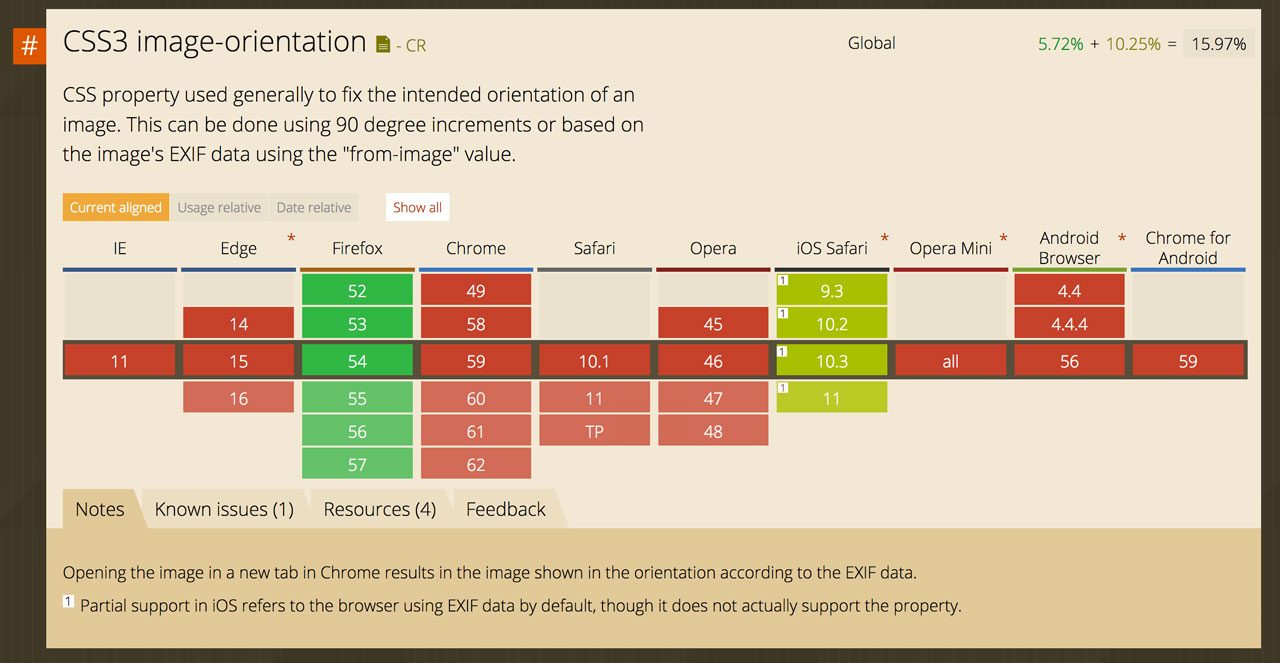

As it happens, there is a CSS3 image-orientation property for exactly this purpose. It even includes a magical-sounding from-image setting, which sounds like it should fix things right up. Unfortunately nobody but Firefox supports this approach. It’s up to us web developers to rotate EXIF-oriented photos ourselves.

Compatibility is (usually) better than correctness

But wait, it gets worse! One platform, iOS, behaves differently than every other operating system (at least, so far as I’ve gathered). iOS arguably does the “right thing” with these tags, but it is the only case I know of where the EXIF tags are taken into account. Everyone else seemingly ignores the EXIF orientation entirely.

Let’s say you upload a photo you took in EXIF Orientation 3 (volume buttons up) from Mobile Safari on iOS. The resulting photo will look totally normal on your iPhone, because iOS decoded the EXIF Orientation and flipped the image around for you. Everyone else on every other platform (including Safari on macOS) will see an upside-down version. The best part is how it looks totally normal to the original poster, understandably baffled by the inevitable jokes in the comments about being in the opposite hemisphere.

One additional caveat I discovered is that iOS only compensates for the EXIF Orientation with <img> tags, ignoring the EXIF orientation of background images, like non-iOS browsers. My geotagging interface switches between <img> and background-image CSS, so this detail was just one more mystery to untangle along the way.

Taipei 101 as viewed from the 象山 Xiangshan Trail (aka Elephant Mountain).

Yep, CSS can fix it

The upshot of all this EXIF Orientation mode stuff is that working with photos that are upside-down or sideways is really annoying, making it hard to decipher whatever it was you were trying to photograph. It’s important for me to get this detail right, instead of holding my device sideways/upside-down to see the dang photo like a chump.

After detecting the EXIF Orientation mode in JavaScript and assigning a set of CSS classes, I used a transform property to rotate each image (or flip it) back into its intended position. Success! Except … in iOS, which earns a special browser sniff for not conforming. Without that special case, my CSS transform would flip an image, only to have iOS helpfully flip the photo back upside-down again (and then I was like (╯°□°)╯︵ ┻━┻).
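Here is a minimal sketch of that mapping. Instead of CSS classes it returns a transform string directly, which is a simplification for illustration. The function name and the `browserAutoRotates` flag (standing in for the iOS special case) are hypothetical, and how you detect that case is left out.

```javascript
// CSS transforms that undo each of the eight EXIF Orientation values.
const EXIF_TRANSFORMS = {
  1: 'none',                      // already upright
  2: 'scaleX(-1)',                // mirrored left-to-right
  3: 'rotate(180deg)',            // upside-down
  4: 'scaleY(-1)',                // mirrored top-to-bottom
  5: 'rotate(90deg) scaleY(-1)',  // mirrored and rotated
  6: 'rotate(90deg)',             // needs a quarter turn clockwise
  7: 'rotate(90deg) scaleX(-1)',  // mirrored and rotated
  8: 'rotate(-90deg)'             // needs a quarter turn counter-clockwise
};

// Pick the transform for an <img>. When the browser already applies the
// EXIF orientation itself, adding a transform would rotate the photo twice.
function cssTransformForOrientation(orientation, browserAutoRotates) {
  if (browserAutoRotates) return 'none';
  return EXIF_TRANSFORMS[orientation] || 'none';
}
```

One thing to keep in mind is that CSS transforms don't change the element's layout box, so a photo rotated by a quarter turn may need its container sized to match.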

If I were processing photos on the server side, I would prefer rotating photos by rearranging the order of bits in the JPEG scan and stripping out the EXIF orientation tag altogether. Either jhead -autorot flipped.jpg or exiftran -ia rotated.jpg would neatly avoid the problem of client-side photo orientation.

Mobile telephones are really, like, a thing now

You may have noticed lots of people enjoy taking pictures with their mobile phones. One might say they’re more like network-connected cameras than phones at this point. This kind of had me wondering—what if I load up a Boundary Issues geotagging page on my camera/phone, and maybe press the <input type="file"> to launch the camera app—capturing a geotagged photo right from the (tiny) web page?

This is kind of getting back to where I started, except with a slightly different interaction than before. Instead of telling a map to gather my current location with the geolocation API, a photo could act both as a saved-for-later digital artifact and as a means to convey my current location into a WOF record.

In iOS you can totally launch the camera from a web page, and the JavaScript gets the same data Blob, but the EXIF metadata is mysteriously lacking geotags, even if you’ve enabled location services for Mobile Safari, Chrome, and the Camera. Android does include geotags with photos taken with its version of the Chrome browser, but I’ve found that it occasionally omits them.

I’ve filed a bug report with Apple and created a minimal test case, but so far it’s hard to say why iOS omits geotags, or whether it’s likely to be fixed in a future release. I’ll update this post as I learn more.

Metadata is complicated

JPEG geotags, and the mixed salad of web technologies required to work with them, are tricky to get right. But the resulting user experience of capturing latitude/longitude coordinates via the camera is really seamless, once you get the settings right. This ease-of-use is burdened by the fact that geotagging metadata can be dangerous if you inadvertently share your location on the open web, especially for at-risk populations. (There are helpful guides online for iOS and Android.)

The inertia that comes with phone camera configurations makes it easy to accidentally leave geotags enabled outside of the narrow data-gathering context. The small choices that developers and designers make about these interfaces (what are the defaults? when do you get visual cues that geotagging is enabled?) are shifting norms around what we’re comfortable sharing, with whom, and in what contexts. Software that nudges us toward oversharing will eventually exclude and endanger those of us who have reason to worry about our location privacy (I would argue that includes everyone, even if they don’t realize it yet).

I want Boundary Issues to use geotagged photos responsibly, ideally reminding each user to reconfigure location services once they are done gathering venue locations. Until iOS and Android provide some explicit mechanism for this, it might make sense to send an email or Slack message reminder after each geotagging session.

I’ve been struck by how hard it is to detect which photos carry geotagged metadata and how easy it is to accidentally share my precise location online. Both iOS and Android helpfully group photo albums by location, but lack safety features like geofencing or warning me when I’m about to share my location metadata. There are many opportunities for improving web-based applications as well. We web developers need to show users which of their photos carry geotags, especially before they upload them.
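As one small example of what that could look like, here is a minimal sketch of a pre-upload check. It assumes each photo's EXIF tags have already been parsed into a plain object keyed by the standard tag names GPSLatitude and GPSLongitude. The `photo` shape, the function names and the warning text are all hypothetical.

```javascript
// True when a parsed EXIF tag object carries both GPS coordinates.
function hasGeotags(tags) {
  return tags != null &&
    tags.GPSLatitude !== undefined &&
    tags.GPSLongitude !== undefined;
}

// Build a warning message naming the photos that would reveal a location,
// or return null when none of them carry geotags.
function geotagWarning(photos) {
  const tagged = photos.filter((photo) => hasGeotags(photo.tags));
  if (tagged.length === 0) return null;
  const names = tagged.map((photo) => photo.filename).join(', ');
  return `${tagged.length} of ${photos.length} photos include location data: ${names}`;
}
```

A page could show this message next to the upload button, so the decision to share a location is an explicit one rather than a side effect.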

Onward

Despite some challenges working with EXIF metadata, it’s been fun to have a simpler mechanism for collecting new WOF venues. I now find myself photographing lots of signage, particularly those signs that include place names in both English and Korean/Chinese. I now explore the built environment thinking of which places I might want to capture for Who’s On First.

I’ve also been doing some experiments with using the Cordova mobile app framework to run a basic Tangram map. This geotagging interface has me thinking about how an offline-friendly geotagging app might incorporate the work Aaron has been doing on place bookmarking. In due time all of this will be ready enough to invite more users to try it out. Please get in touch if you’re interested!