Retail brands, chain stores, and other public and private venues have a vested interest in making sure people can find their brick-and-mortar locations. As a result, they frequently put the information on their websites in the form of store locators or listings. Unfortunately, the data is almost never made available in a format that’s easily machine-readable.

The new All The Places project extracts this location information into machine-readable geographic data with useful properties. It consists of hundreds of website spiders that crawl store locator pages, extract the location information, and translate it into a better format, plus the code to collate all of the results into a data dump suitable for publication.
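As a rough illustration of the translate and collate steps, the sketch below turns scraped store records into GeoJSON Features and joins them into a single dump with one Feature per line. It is not the project's actual code: the function names `store_to_feature` and `collate`, the record keys (`name`, `city`, `lat`, `lon`), and the sample stores are all assumptions made for this example.

```python
import json


def store_to_feature(record):
    """Turn one scraped store record (a plain dict) into a GeoJSON Feature.

    The keys "lat" and "lon" are hypothetical; a real spider would map
    whatever fields the store locator page exposes.
    """
    # GeoJSON orders coordinates as [longitude, latitude].
    lon = float(record["lon"])
    lat = float(record["lat"])
    # Everything that is not a coordinate is kept as a property.
    properties = {k: v for k, v in record.items() if k not in ("lat", "lon")}
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": properties,
    }


def collate(records):
    """Serialize records as text with one GeoJSON Feature per line."""
    return "\n".join(json.dumps(store_to_feature(r), sort_keys=True) for r in records)


# Made-up sample records standing in for spider output.
scraped = [
    {"name": "Example Store #1", "city": "San Francisco", "lat": "37.7749", "lon": "-122.4194"},
    {"name": "Example Store #2", "city": "New York", "lat": "40.7128", "lon": "-74.0060"},
]

dump = collate(scraped)
print(dump)
```

Keeping each Feature on its own line means the output of many spiders can be concatenated without re-parsing anything.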

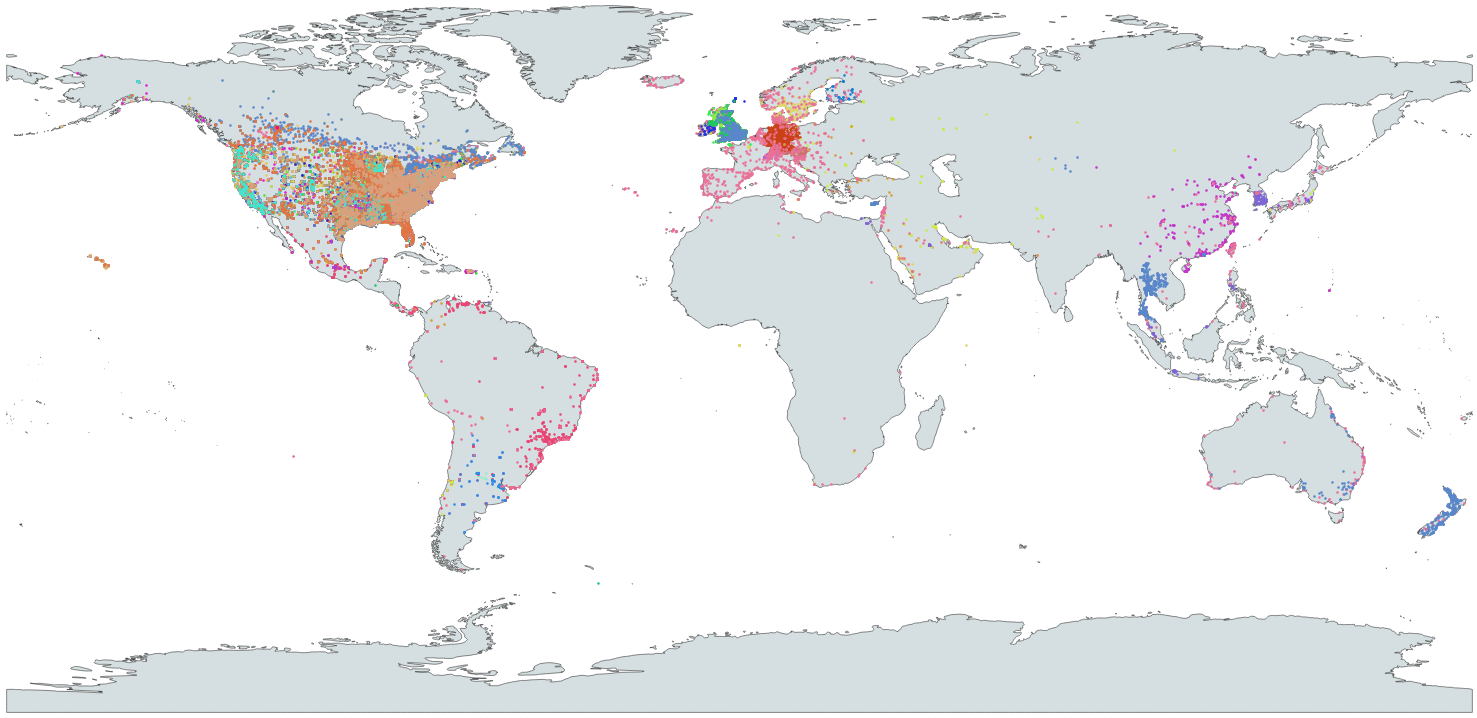

After all the spiders run, the result is posted to alltheplaces.xyz in GeoJSON format for public download. The last run, from Dec. 21st, 2017, included 300,000+ lines (one for each store location) of newline-delimited GeoJSON data. This data can then be used to build maps of your favorite stores, merged into gazetteers like Who’s On First, and integrated into search engines like Pelias.
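Because every line of the download is a self-contained GeoJSON Feature, the data can be processed one line at a time without loading the whole file. The following is a minimal sketch of that idea using only the Python standard library. The sample lines and the `name` and `brand` property keys are invented for illustration, and the properties in the published data may differ.

```python
import io
import json
from collections import Counter


def read_features(lines):
    """Yield one parsed GeoJSON Feature per non-empty line."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        yield json.loads(line)


# In-memory stand-in for a downloaded file; with a real download you would
# pass an open file object instead. All values here are made up.
sample = io.StringIO(
    '{"type": "Feature", "geometry": {"type": "Point", "coordinates": [-122.4194, 37.7749]}, '
    '"properties": {"name": "Example Store #1", "brand": "Example"}}\n'
    '{"type": "Feature", "geometry": {"type": "Point", "coordinates": [-74.0060, 40.7128]}, '
    '"properties": {"name": "Example Store #2", "brand": "Example"}}\n'
    '{"type": "Feature", "geometry": {"type": "Point", "coordinates": [-87.6298, 41.8781]}, '
    '"properties": {"name": "Other Shop", "brand": "Other"}}\n'
)

features = list(read_features(sample))

# Count locations per (hypothetical) brand property.
counts = Counter(f["properties"].get("brand", "unknown") for f in features)
for brand, total in counts.most_common():
    print(brand, total)
```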

All The Places is an independent open source project, similar in scope to OpenAddresses, and it lives on GitHub. It’s easy to contribute to All The Places!

- Look through the issues to see if we’ve already tackled your favorite restaurant, salon, or clothing chain. If it’s not there, simply add it as an issue.

- Are you interested in web scraping or excited to learn how to write spiders? Pick one of the open, unassigned issues and read the documentation to get started writing your own spider.